■ Gaze Estimation and Tracking

■ 3-D Modeling of Human Face from 2-D Images

■ Multimodal Face Recognition with Visible and Infrared Imaging

■ Hyperspectral Image Analysis for Medical Diagnosis and Target Detection

■ Forensic Imaging and Analysis

■ Signal Restoration in Terahertz Imaging and Spectroscopy

■ Evolvable Block-based Neural Networks for ECG Signal Monitoring

Gaze Estimation and Tracking

This work estimates a person's gaze point from the pupil center and head pose angles for human-computer interaction. Gaze estimation determines where a person is looking on a computer monitor through visual analysis of the face in video taken by a camera mounted on the monitor. Applications include hands-free control of the mouse cursor, human-computer interaction in video gaming, estimating consumer preferences for a product in marketing surveys, monitoring the alertness of a vehicle driver, and diagnosing patients with psychological disorders who have difficulty communicating effectively. Many existing gaze estimation approaches require active illumination devices such as infrared (IR) LEDs, which can limit the operating distance of the gaze tracker and prevent it from working outdoors. The proposed method combines the pupil center and head pose angles to determine the gaze point in real time using an off-the-shelf camera with no IR illuminators. The pupil centers are detected in every frame from the face region in a live video stream. Head pose angles are estimated by matching the user's detected facial features to those projected onto a generic 3-D head model. The gaze point is expressed as a linear combination of the pupil center and head pose angles, whose coefficients can be found with a linear regression model.
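The final regression step can be sketched as an ordinary least-squares fit. This is a minimal illustration and not the authors' implementation: the feature layout (pupil x, pupil y, yaw, pitch, roll), the function names `fit_gaze_model` and `predict_gaze`, and the synthetic calibration data are all assumptions made for the example.

```python
import numpy as np

def fit_gaze_model(features, screen_points):
    """Fit screen = [features, 1] @ W by ordinary least squares.

    features:      (n, 5) array, assumed layout [pupil_x, pupil_y, yaw, pitch, roll]
    screen_points: (n, 2) array of known gaze targets, e.g. calibration dots
    Returns W with shape (6, 2); the last row is the bias term.
    """
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    W, *_ = np.linalg.lstsq(X, screen_points, rcond=None)
    return W

def predict_gaze(W, features):
    """Map one or more feature vectors to (x, y) screen coordinates."""
    features = np.atleast_2d(features)
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    return X @ W

# Synthetic, noise-free calibration data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
true_W = rng.normal(size=(6, 2))
calib_features = rng.normal(size=(30, 5))
calib_targets = np.hstack([calib_features, np.ones((30, 1))]) @ true_W

W = fit_gaze_model(calib_features, calib_targets)
predicted = predict_gaze(W, rng.normal(size=(1, 5)))
```

In practice the calibration targets would come from asking the user to look at known screen positions, and measurement noise would make the fit approximate rather than exact.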

Related Publications:

■ R. Oyini Mbouna, S. G. Kong, and M. G. Chun, "Visual Analysis of Eye State and Head Pose for Driver Alertness Monitoring," IEEE Transactions on Intelligent Transportation Systems, Vol. 14, No. 3, pp. 1462-1469, September 2013.

■ R. Oyini Mbouna and S. G. Kong, "Pupil Center Detection with a Single Webcam for Gaze Tracking," Journal of Measurement Science and Instrumentation, Vol. 3, No. 2, pp. 133-136, June 2012.

■ I. S. Kim, H. S. Choi, K. M. Yi, J. Y. Choi, and S. G. Kong, "Intelligent Visual Surveillance - A Survey," International Journal of Control, Automation, and Systems, Vol. 8, No. 5, pp. 926-939, October 2010.

3-D Modeling of Human Face from 2-D Images

Since a human face is essentially a 3-D object, changes in head pose together with illumination variations significantly degrade the performance of face recognition. The use of a 3-D model of a human face promises robust face recognition that is invariant to pose and lighting. The 3-D structure of a person's facial surface does not change over time. This research estimates head pose angles and 3-D depth information from a 2-D query face image using a reference 3-D face model of the same gender and ethnicity as the person in the query image. The depth information is obtained by minimizing the disparity between a set of facial feature points on the 2-D face and the 2-D projection of the corresponding feature points on the 3-D reference face model. The resulting 3-D face model is compared with a ground-truth 3-D face model scanned using a Kinect sensor. The 3-D objects obtained are printed using a 3-D printer to confirm the accuracy of the 3-D shape.
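The idea of fitting pose and depth by minimizing the 2-D disparity can be sketched with a brute-force search. This is a simplified illustration under stated assumptions, not the published method: it assumes an orthographic camera, rotation about the vertical axis only (yaw), and a single global depth scale, and the names `project` and `fit_yaw_and_depth` are hypothetical.

```python
import numpy as np

def project(points_3d, yaw, depth_scale):
    """Scale depth (z), rotate about the vertical (y) axis, drop z (orthographic)."""
    pts = points_3d * np.array([1.0, 1.0, depth_scale])
    c, s = np.cos(yaw), np.sin(yaw)
    R = np.array([[c, 0.0, s],
                  [0.0, 1.0, 0.0],
                  [-s, 0.0, c]])
    return (pts @ R.T)[:, :2]

def fit_yaw_and_depth(ref_3d, query_2d, yaws, depth_scales):
    """Grid search for the (yaw, depth_scale) with the smallest squared disparity."""
    best = (None, None, np.inf)
    for yaw in yaws:
        for d in depth_scales:
            err = np.sum((project(ref_3d, yaw, d) - query_2d) ** 2)
            if err < best[2]:
                best = (yaw, d, err)
    return best

# Hypothetical reference feature points and a synthetic query view.
rng = np.random.default_rng(1)
ref_3d = rng.normal(size=(12, 3))
query_2d = project(ref_3d, yaw=0.3, depth_scale=1.2)

yaw_hat, depth_hat, err = fit_yaw_and_depth(
    ref_3d, query_2d,
    yaws=np.linspace(-0.6, 0.6, 25),        # radians, step 0.05
    depth_scales=np.linspace(0.5, 1.5, 21)  # step 0.05
)
```

A real system would estimate all three pose angles and more than one depth parameter, and would typically use a gradient-based optimizer instead of a grid.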

Related Publications:

■ S. G. Kong and R. Oyini Mbouna, "Head Pose Estimation From a 2D Face Image Using 3D Face Morphing with Depth Parameters," IEEE Transactions on Image Processing, Vol. 24, No. 6, pp. 1801-1808, June 2015.

Multimodal Face Recognition with Visible and Infrared Imaging

This work develops an adaptive data fusion technique for visible and thermal infrared (IR) images to achieve robust face recognition regardless of illumination variations. The work was partially supported by the Office of Naval Research. The combined use of visible and thermal IR image sensors offers a viable means of improving on face recognition techniques that rely on a single imaging modality. Visible imaging has difficulty recognizing faces in low illumination. A thermal IR sensor measures the energy radiated by the object, so it is less sensitive to illumination changes and can operate in darkness. Data fusion of visible and thermal images can produce face images that are robust to illumination variations. However, thermal face images of people wearing eyeglasses may fail to provide useful information around the eyes, since glass blocks a large portion of thermal energy. Adaptive data fusion detects the presence of eyeglasses in order to improve the information content of the fused visible-thermal image for robust face recognition.
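One way to picture the adaptive step is a pixel-wise weighted average that stops trusting the thermal image wherever eyeglasses were detected. The sketch below is an assumption-laden illustration, not the published multiscale method: the eyeglass mask is taken as given, the fusion is single-scale, and `fuse_visible_thermal` and its `thermal_weight` parameter are hypothetical names.

```python
import numpy as np

def fuse_visible_thermal(visible, thermal, eyeglass_mask, thermal_weight=0.5):
    """Weighted average of two registered images, ignoring thermal data under eyeglasses.

    visible, thermal: float arrays of the same shape, assumed already aligned
    eyeglass_mask:    boolean array, True where eyeglasses were detected
    """
    w = np.full(visible.shape, thermal_weight, dtype=float)
    w[eyeglass_mask] = 0.0  # glass blocks thermal energy, so fall back to visible
    return (1.0 - w) * visible + w * thermal

# Tiny synthetic example with constant-valued images.
visible = np.full((4, 4), 0.2)
thermal = np.full((4, 4), 0.8)
mask = np.zeros((4, 4), dtype=bool)
mask[1, 1:3] = True  # pretend eyeglasses cover two pixels

fused = fuse_visible_thermal(visible, thermal, mask)
```

Outside the mask the result is the average of the two modalities; inside the mask it equals the visible image.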

Related Publications:

■ I. S. Kim, H. S. Choi, K. M. Yi, J. Y. Choi, and S. G. Kong, "Intelligent Visual Surveillance - A Survey," International Journal of Control, Automation, and Systems, Vol. 8, No. 5, pp. 926-939, October 2010.

■ S. G. Kong, J. Heo, F. Boughorbel, Y. Zheng, B. R. Abidi, A. Koschan, M. Yi, and M. A. Abidi, "Multi-scale Fusion of Visible and Thermal IR Images for Illumination-Invariant Face Recognition," International Journal of Computer Vision, Vol. 71, No. 2, pp. 215-233, Feb. 2007.

■ S. Moon and S. G. Kong, "Adaptive Fusion of Visible and Thermal Images based on Multiscale Analysis for Face Recognition," Proc. IEEE Int'l Conf. on Computational Intelligence for Homeland Security and Personal Safety (CIHSPS06), Alexandria, VA, Oct. 2006.

■ S. G. Kong, J. Heo, B. R. Abidi, J. Paik, and M. A. Abidi, "Recent Advances in Visual and Infrared Face Recognition - A Review," Computer Vision and Image Understanding, Vol. 97, No. 1, pp. 103-135, January 2005. (Most Cited Paper Award)

■ J. Heo, S. G. Kong, B. Abidi, and M. Abidi, "Fusion of Visual and Thermal Signatures with Eyeglass Removal for Robust Face Recognition," Proc. Workshop on Object Tracking and Classification Beyond the Visible Spectrum (OTCBVS04), Washington, DC, July 2004. (Best Paper Award)

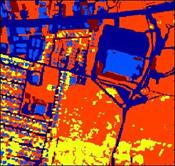

Hyperspectral Image Analysis for Medical Diagnosis and Target Detection

This research aims at developing hyperspectral imaging and signature classification techniques for real-time, non-invasive diagnosis of skin cancers. The application focus is the study and correlation of reflectance and fluorescence image signals with the neoplastic properties of normal and tumor tissues. A technical innovation of this project is the combination of an advanced computing algorithm based on the support vector machine with a real-time hyperspectral imaging system for detecting small differences in the reflectance and fluorescence profiles of normal and malignant tumor tissues. This approach is expected to advance the effective and rapid detection of tumors over large areas of organs and the understanding of cancer in general. The skin cancer detection technique should also be translatable to other diseases at other organ sites, with the potential to change the future of diagnostic medicine. An early phase of this research was successfully applied to the detection of skin tumors on poultry carcasses for food safety inspection.
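To make the classification step concrete, the sketch below trains a linear support vector machine on per-pixel spectra. Everything specific here is an assumption for illustration: the spectra are synthetic (20 bands, with a made-up intensity bump for the tumor class), the kernel is linear, and the small hand-written trainer `train_linear_svm` stands in for whatever SVM implementation the project actually used.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=300):
    """Batch subgradient descent on the L2-regularized hinge loss; y must be -1 or +1."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1.0  # samples inside or on the wrong side of the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def make_spectra(rng, n_per_class, n_bands=20):
    """Synthetic pixel spectra: label -1 = normal tissue, +1 = tumor (hypothetical profiles)."""
    base = np.full(n_bands, 0.5)
    tumor = base.copy()
    tumor[8:13] += 0.3  # assumed small spectral difference in a few bands
    normal_px = base + rng.normal(scale=0.05, size=(n_per_class, n_bands))
    tumor_px = tumor + rng.normal(scale=0.05, size=(n_per_class, n_bands))
    X = np.vstack([normal_px, tumor_px])
    y = np.concatenate([-np.ones(n_per_class), np.ones(n_per_class)])
    return X, y

rng = np.random.default_rng(0)
X_train, y_train = make_spectra(rng, 100)
X_test, y_test = make_spectra(rng, 100)

# Center the spectra on the training mean before fitting.
mean_spectrum = X_train.mean(axis=0)
w, b = train_linear_svm(X_train - mean_spectrum, y_train)

pred = np.sign((X_test - mean_spectrum) @ w + b)
accuracy = float((pred == y_test).mean())
```

Applied to an image, the same decision function would be evaluated at every pixel to produce a tumor map; real tissue spectra are far less cleanly separated than this synthetic data.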

Related Publications:

■ Y. Zhao, X. Wu, S. G. Kong, and L. Zhang, "Joint Segmentation and Pairing of Multispectral Chromosome Images," Pattern Analysis and Applications, Vol. 16, Issue 4, pp. 497-506, November 2013.

■ Y. Zhao and S. G. Kong, "Automated Classification of Touching or Overlapping M-FISH Chromosomes by Region Fusion and Homolog Pairing," Pattern Analysis and Applications, Vol. 16, Issue 1, pp. 31-39, February 2013.

■ Z. Du, Y. Jeong, M. K. Jeong, and S. G. Kong, "Multidimensional Local Spatial Autocorrelation Measure for Integrating Spatial and Spectral Information in Hyperspectral Image Band Selection," Applied Intelligence, Vol. 36, Issue 3, pp. 542-552, April 2012.

■ Y. Zhao, L. Zhang, and S. G. Kong, "Band Subset Based Clustering and Fusion for Hyperspectral Imagery Classification," IEEE Transactions on Geoscience and Remote Sensing, Vol. 49, No. 2, pp. 747-756, February 2011.

■ Z. Du, M. K. Jeong, and S. G. Kong, "Band Selection of Hyperspectral Images for Automatic Detection of Poultry Skin Tumors," IEEE Transactions on Automation Science and Engineering, Vol. 4, No. 3, pp. 332-339, 2007.

■ S. G. Kong, M. Martin, and T. Vo-Dinh, "Hyper-spectral Fluorescence Imaging for Mouse Skin Tumor Detection," ETRI Journal, Vol. 28, No. 6, pp. 770-776, December 2006.

■ S. G. Kong, Z. Du, M. Martin, and T. Vo-Dinh, "Hyper-spectral Fluorescence Image Analysis for Use in Medical Analysis," Proc. of SPIE Conf. on Biomedical Optics, San Jose, CA, 2005.

■ I. Kim, Y. R. Chen, M. S. Kim, and S. G. Kong, "Detection of Skin Tumors on Chicken Carcasses using Hyperspectral Fluorescence Imaging," Transactions of the American Society of Agricultural Engineers, Vol. 47, No. 5, pp. 1785-1792, 2004. (Honorable Mention Paper Award)

■ S. G. Kong, Y. R. Chen, I. Kim, and M. S. Kim, "Analysis of Hyperspectral Fluorescence Images for Poultry Skin Tumor Inspection," Applied Optics, Vol. 43, No. 4, pp. 824-833, February 2004.

Forensic Imaging and Analysis

Forensic imaging provides investigative images, photographic processing, and visual analysis to find evidence for investigations by law enforcement agencies. Research topics include imaging techniques in challenging environments, image enhancement, restoration of degraded images, video analysis, authentication, evaluation, and latent fingerprint examination. Forged seal detection is a computational procedure that verifies the authenticity of a seal impression imprinted on a document using the seal overlay metric, defined as the ratio of the effective seal impression pattern to the noise in the neighborhood of the reference seal impression region. Detection of transcribed seal impressions uses a 3-D scanner to generate a 3-D pressure trace map that reveals forged seal impressions transferred from a genuine document to a target document using transcription media. Sequence discrimination of heterogeneous crossings determines the order in which a stamped seal impression and ink-printed text were applied to a document, which helps detect falsely signed documents. Invisible ink detection uses absorption difference to reveal invisible ink patterns in the visible spectrum without special equipment such as UV lighting or IR filters. Frame-based recovery of corrupted video files helps recover the contents of a corrupted video file on a frame-by-frame basis.

Related Publications:

■ J. Lee, S. G. Kong, T. Y. Kang, and B. Kim, "Invisible Ink Mark Detection in the Visible Spectrum using Absorption Difference," Forensic Science International, Vol. 236, pp. 77-83, March 2014.

■ M. G. Chun and S. G. Kong, "Focusing in Thermal Imagery using Morphological Gradient Operator," Pattern Recognition Letters, Vol. 38, Issue 4, pp. 20-25, March 2014.

■ G. Na, K. Shim, G. Moon, S. G. Kong, E. Kim, and J. Lee, "Frame-based Recovery of Corrupted Video Files using Video Codec Specifications," IEEE Transactions on Image Processing, Vol. 23, No. 2, pp. 517-526, February 2014.

■ K. Y. Lee, J. Lee, S. G. Kong, and B. Kim, "Sequence Discrimination of Heterogeneous Crossing of Seal Impression and Ink-printed Text using Adhesive Tapes," Forensic Science International, Vol. 234, pp. 120-125, January 2014.

■ J. Lee, S. G. Kong, Y. Lee, J. Kim, and N. Jung, "Detection of Transcribed Seal Impressions using 3-D Pressure Traces," Journal of Forensic Sciences, Vol. 57, Issue 6, pp. 1531-1536, November 2012.

■ J. Lee, S. G. Kong, Y. Lee, K. Moon, O. Jeon, J. H. Han, B. Lee, and J. Seo, "Forged Seal Detection based on Seal Overlay Metric," Forensic Science International, Vol. 214, Issue 1, pp. 200-206, January 2012.

■ C. Ryu, S. G. Kong, and H. Kim, "Enhancement of Feature Extraction for Low-Quality Fingerprint Images using Stochastic Resonance," Pattern Recognition Letters, Vol. 32, Issue 2, pp. 107-113, January 2011.

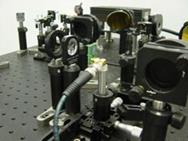

Signal Restoration in Terahertz Imaging and Spectroscopy

Related Publications:

■ C. Ryu and S. G. Kong, "Boosting Terahertz Radiation in THz-TDS using Continuous-Wave Laser," Electronics Letters, Vol. 46, No. 5, pp. 359-360, March 4, 2010.

■ C. Ryu and S. G. Kong, "Atmospheric Degradation Correction of Terahertz Beams using Multiscale Signal Restoration," Applied Optics, Vol. 49, No. 5, pp. 927-935, February 2010.

■ S. G. Kong and D. H. Wu, "Signal Restoration from Atmospheric Degradation in Terahertz Spectroscopy," Journal of Applied Physics, Vol. 103, No. 11, 113105 (6 pages), June 2008.

■ S. G. Kong and D. H. Wu, "Terahertz Time-Domain Spectroscopy for Explosive Trace Detection," Proc. IEEE Int'l Conf. on Computational Intelligence for Homeland Security and Personal Safety (CIHSPS06), Alexandria, VA, Oct. 2006.

Evolvable Block-based Neural Networks for ECG Signal Monitoring

This work aims at developing evolvable neural networks that reconfigure their structures and connection weights autonomously in dynamic operating environments. The block-based neural network model consists of a 2-D array of basic blocks whose network structure and connection weights can be modified by evolutionary algorithms, and the model is intended for implementation on reconfigurable digital hardware. Block-based neural networks have demonstrated potential for analyzing electrocardiogram (ECG) signals to monitor human health conditions online, in a way that is insensitive to variations across individuals, time of day, and body conditions. People working in dangerous environments (e.g., military personnel, firefighters, and truck drivers), as well as older people, will benefit from constant monitoring of their health conditions to predict dangerous states such as loss of consciousness and heart infarction.

Related Publications:

■ W. Jiang and S. G. Kong, "Block-based Neural Networks for Personalized ECG Signal Classification," IEEE Transactions on Neural Networks, Vol. 18, No. 6, pp. 1750-1761, November 2007.

■ W. Jiang and S. G. Kong, "A Least-Squares Learning for Block-based Neural Networks," Dynamics of Continuous, Discrete and Impulsive Systems, Vol. 14, No. S1, pp. 242-247, 2007.

■ S. Merchant, G. D. Peterson, S. Park, and S. G. Kong, "FPGA Implementation of Evolvable Block-based Neural Networks," Proc. Congress on Evolutionary Computation, Vancouver, July 2006.

■ W. Jiang, S. G. Kong, and G. Peterson, "ECG Signal Classification using Block-based Neural Networks," Proc. International Joint Conf. on Neural Networks, Montreal, Canada, 2005.

■ S. W. Moon and S. G. Kong, "Block-based Neural Networks," IEEE Transactions on Neural Networks, Vol. 12, No. 2, pp. 307-317, March 2001.